The multicore programming model

Parallel tasks of execution

A multicore program consists of several tasks. Typically, these tasks all start at the beginning of the program and run indefinitely, although the XCORE architecture also allows transitory tasks. Each task is an ordinary function. A task function usually performs some one-off setup and then enters a never-ending loop that continuously handles events from one or more resources. Each task in a program runs on its own core, and because each tile provides a fixed number of cores, there is a hard limit on the number of tasks that can execute on a single tile. Where a system has too many conceptual ‘event listeners’ to give each its own core, the XCORE architecture allows events to be multiplexed and demultiplexed, which effectively lets multiple tasks run cooperatively on a single core.
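As a host-side illustration only (plain Python, not XCORE code), the sketch below models the usual shape of a task, one-off setup followed by an event-handling loop, and shows two such tasks multiplexed cooperatively on a single "core". The names `counter_task` and `run_on_one_core`, and the `(target, payload)` event tuples, are hypothetical and invented for this sketch; on real hardware the loop never ends and events come from hardware resources rather than a list.

```python
from collections import deque


def counter_task(name, log):
    """Hypothetical task: one-off setup, then a loop that handles events."""
    count = 0                  # one-off setup
    while True:                # never-ending event loop
        event = yield          # hand control back until an event is delivered
        count += 1
        log.append(f"{name} handled {event} (#{count})")


def run_on_one_core(tasks, events):
    """Demultiplex (target, payload) events to tasks sharing one core."""
    for task in tasks.values():
        next(task)             # run each task's setup up to its first wait
    queue = deque(events)
    while queue:
        target, payload = queue.popleft()
        tasks[target].send(payload)   # the task runs until it waits again


log = []
tasks = {"uart": counter_task("uart", log),
         "button": counter_task("button", log)}
run_on_one_core(tasks, [("uart", "rx byte"), ("button", "press"), ("uart", "rx byte")])
print("\n".join(log))
```

Generators fit this sketch because each task keeps its own state between events while yielding control only at well-defined points, which mirrors how cooperatively multiplexed tasks share a core.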

Channel-based communication

A key concept in the XCORE architecture and its programming model is channel-based communication. In this model, two tasks that need to communicate are connected by a channel. In practice, each task takes control of a chanend resource, which represents its end of the channel. Both tasks must enter a transacting state before any information is exchanged: a send operation blocks until the receiving end is ready, and a receive operation blocks until data is sent. A route through the network is created automatically when one channel end attempts to send data to another, and it is typically ‘torn down’ at the end of the transaction.
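The blocking behaviour of a transaction can be modelled on a host with threads. This is a sketch under stated assumptions, not XCORE code: `Channel`, `send` and `receive` are hypothetical names, data flows in one direction only, and exactly one sending task and one receiving task are assumed. A real chanend is a hardware resource and can both send and receive.

```python
import queue
import threading
import time


class Channel:
    """Hypothetical host-side model of a synchronous, one-way channel.

    send() returns only after the receiver has taken the value, and
    receive() blocks until a value has been sent. Assumes exactly one
    sending task and one receiving task.
    """

    def __init__(self):
        self._data = queue.Queue(maxsize=1)  # holds the value in transit
        self._ack = threading.Semaphore(0)   # released once the value is taken

    def send(self, value):
        self._data.put(value)   # hand the value over
        self._ack.acquire()     # block until the receiving end has taken it

    def receive(self):
        value = self._data.get()  # block until data is sent
        self._ack.release()       # unblock the waiting sender
        return value


chan = Channel()
sent = threading.Event()


def sender_task():
    chan.send("hello")
    sent.set()                  # only reached once the receiver has the data


t = threading.Thread(target=sender_task)
t.start()
time.sleep(0.1)
blocked_before_receive = not sent.is_set()  # True: no receiver yet
received = chan.receive()
t.join()
print(blocked_before_receive, received, sent.is_set())  # True hello True
```

The sender stays blocked for as long as nobody calls `receive()`, which is the rendezvous property the transaction model relies on.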

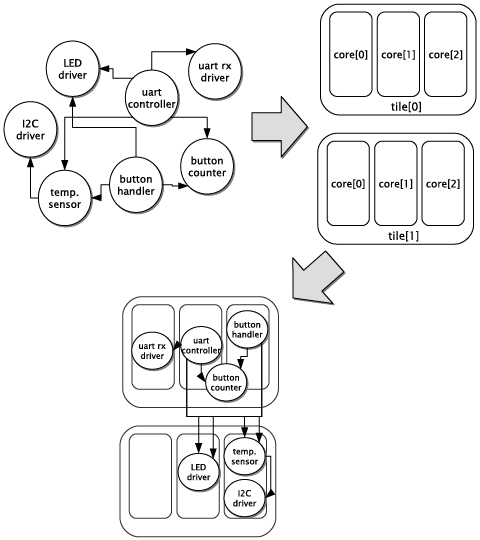

The following diagram shows how a system of tasks, connected by channels, might be placed on a pair of tiles:

Event-based programming

Typically, a task acts in response to one or more external events, such as a change in value on a GPIO pin or the arrival of data from another task over a channel. Events are supported at the hardware level in the XCORE architecture: they are generated by event-raising resources and are conceptually similar to interrupts. Each event-raising resource has a trigger condition, which is often configurable. When a resource’s trigger condition is met, an event ‘becomes available’ on that resource. Each such resource also has an ‘event vector’ which, like an interrupt vector, is the address of the code that handles the event.

Unlike an interrupt, an event transfers control to its handler only when the core enters an explicit wait state. At that point, one available event is taken; any other available events remain pending and can be handled the next time the core enters a wait state. If no events are available when the core enters the wait state, the core pauses until one becomes available, and that event is then handled immediately.

This model simplifies application code because it strictly limits the points at which event-handling code may run. It also makes execution time significantly easier to reason about, since an event handler never preempts the code that runs between wait states.
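To make the event rules concrete, here is a minimal host-side Python model, not XCORE code. `Resource`, `Core`, `update` and `wait` are hypothetical names. Each resource has a configurable trigger condition and an event vector (modelled as a handler function); an event becomes available when its trigger is met, but a handler runs only when the core explicitly waits, and only one event is taken per wait. The order in which simultaneously available events are picked (list order) is an arbitrary choice of this sketch, not a statement about hardware priority.

```python
class Resource:
    """Hypothetical event-raising resource (for example, a pin or a timer)."""

    def __init__(self, name, trigger, vector):
        self.name = name
        self.trigger = trigger    # configurable trigger condition: value -> bool
        self.vector = vector      # 'event vector': handler run when the event is taken
        self.available = False    # True once an event has become available
        self.value = None

    def update(self, value):
        """Feed a new input value; this never runs the handler directly."""
        self.value = value
        if self.trigger(value):
            self.available = True  # event becomes available and stays pending


class Core:
    """Hypothetical core that only handles events in an explicit wait state."""

    def __init__(self, resources):
        self.resources = resources

    def wait(self):
        """Take ONE available event and jump to its vector.

        Other available events stay pending for the next wait. Real hardware
        would pause here if nothing were available; this model returns False.
        """
        for res in self.resources:  # list order is an arbitrary choice of this sketch
            if res.available:
                res.available = False
                res.vector(res)
                return True
        return False


log = []
pin = Resource("pin", trigger=lambda v: v == 1,
               vector=lambda r: log.append(f"{r.name} went high"))
timer = Resource("timer", trigger=lambda t: t >= 100,
                 vector=lambda r: log.append(f"{r.name} fired at {r.value}"))
core = Core([pin, timer])

pin.update(1)                    # trigger met: event available, no handler runs yet
timer.update(120)                # a second event is now available
handled_before_wait = list(log)  # empty: nothing runs outside a wait state
core.wait()                      # takes one event (the pin's)
core.wait()                      # the timer event was still pending; taken now
print(log)                       # ['pin went high', 'timer fired at 120']
```

Because handlers run only inside `wait()`, every line of task code between waits executes without interruption, which is the property that makes timing easier to reason about.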