Programming an XCORE tile with C and lib_xcore

The XCORE compiler (xcc) supports targeting the XCORE using GNU C or C++. A C platform library, lib_xcore, provides access to XCORE-specific hardware features. Lib_xcore is available as a collection of system headers when using the XCC C compiler; the names of the headers all begin with xcore/.

Parallel execution

The following code listing shows a multicore “hello world” application using lib_xcore. Note that, as usual, the program has a single main function as its entry point: main starts on a single core, and lib_xcore must be used to start tasks on other cores.

```c
#include <stdio.h>
#include <xcore/parallel.h>

DECLARE_JOB(say_hello, (int));
void say_hello(int my_id)
{
    printf("Hello world from %d!\n", my_id);
}

int main(void)
{
    PAR_JOBS(
        PJOB(say_hello, (0)),
        PJOB(say_hello, (1)));
}
```

PAR_JOBS

```c
#include <stdio.h>
#include <xcore/parallel.h>

DECLARE_JOB(say_hello, (int));
void say_hello(int my_id)
{
    /* ... body as in the listing above ... */
}
```

The PAR_JOBS macro, from xcore/parallel.h, is a lib_xcore construct which makes two or more function calls, each on its own core. Each invocation of the PJOB macro represents a task. Its first argument is the function to call, and its second is the argument pack to call it with. A function called by PAR_JOBS must be declared using DECLARE_JOB in the same translation unit as the PAR_JOBS. The parameters of DECLARE_JOB are the name of the function and a pack of typenames which represents its argument signature. Tasks always have a void return type.

```c
    PAR_JOBS(
        PJOB(say_hello, (0)),
        PJOB(say_hello, (1)));
```

When PAR_JOBS is executed, the ‘PJobs’ run in parallel, each on a different core of the current tile. There is an implicit ‘join’ at the end: execution does not continue beyond PAR_JOBS until all the launched tasks have returned. Because each PJob runs on its own core, there must be enough free cores to run all the jobs at the point the PAR_JOBS executes. The number of jobs is therefore hard-limited to the number of cores on the tile. If some cores are already busy running tasks, fewer jobs can be run. If there are not enough cores available, the PAR_JOBS will cause a trap at runtime.

Stack size calculation

In the previous example, two tasks were launched by a single thread, yet it was not necessary to specify where the stacks for those threads should be located. This is because the PAR_JOBS macro allocates a stack for each thread launched, as a ‘sub stack’ of the launching thread’s stack. The size of each allocated stack is calculated by the XMOS tools. The tools also ensure that the stack allocated to the calling thread is sufficient for all its launched threads and callees.

It is possible to view the amount of RAM which has been allocated to stacks using the -report flag to the compiler:

```
$ xcc -target=XK-EVK-XU316 hello_world.c -report
Constraint check for tile[0]:
  Memory available: 524288, used: 22408 . OKAY
    (Stack: 1620, Code: 19528, Data: 1260)
Constraints checks PASSED.
```
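The ‘used’ figure in this report is the sum of the three components shown in parentheses. The check passes because that sum does not exceed the memory available on the tile. Both figures appear to be in bytes, with 524288 being 512 KiB.

$$
\underbrace{1620}_{\text{Stack}} + \underbrace{19528}_{\text{Code}} + \underbrace{1260}_{\text{Data}} = 22408 \le 524288
$$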

To enable this calculation, the compiler emits special symbols for each function which describe its stack requirements; these symbols are detailed in the XCore ABI document. The tools cannot always determine the stack requirement of a function, for example when it calls a function through a pointer or when it is recursive. When the compiler cannot deduce a function's stack requirement, a worst-case requirement must be provided manually. This can be done either by defining the symbols directly or by annotating the code; both approaches are discussed later in this document.

Hardware timers

Timers are a simple resource which can be read to retrieve a timestamp. Timers can be configured with a trigger time, which causes the read operation to block until that timestamp has been reached. This makes timers suitable both for measuring time and for delaying by a known period. Timers can be accessed using lib_xcore’s xcore/hwtimer.h, which provides a number of functions for interacting with timer resources. Like all resource-related functions, lib_xcore’s timer functions work on a resource handle. As timers are a pooled resource, the XCore keeps track of available timers and allocates handles as required.

hwtimer_alloc allocates a timer from the pool and returns its handle. Only a limited number of timers is available, so the allocation can fail, in which case it returns 0.

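```c
#include <stdio.h>
#include <xcore/hwtimer.h>

int main(void)
{
    hwtimer_t timer = hwtimer_alloc();
    if (timer == 0) {
        /* No timer was free in the pool */
        printf("Timer allocation failed\n");
        return 1;
    }

    /* Timer values are unsigned 32-bit, so print them as unsigned */
    printf("Timer value is: %lu\n", (unsigned long)hwtimer_get_time(timer));
    hwtimer_delay(timer, 100000000);  /* block for 100000000 ticks */
    printf("Timer value is now: %lu\n", (unsigned long)hwtimer_get_time(timer));

    hwtimer_free(timer);
    return 0;
}
```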
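Timer values are 32-bit tick counts. Delays are therefore written in ticks, and any comparison of two timestamps has to tolerate the counter wrapping. The host-side C sketch below shows one way to handle both. It assumes a 100 MHz timer clock, which would make the 100000000-tick delay in the listing above one second. ms_to_ticks and time_reached are hypothetical helpers, not lib_xcore functions.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: timers tick at a 100 MHz reference clock (10 ns per tick). */
#define TICKS_PER_MS 100000u

/* Hypothetical helper: convert milliseconds to timer ticks.
   Only valid up to about 42949 ms, beyond which the 32-bit result wraps. */
uint32_t ms_to_ticks(uint32_t ms)
{
    assert(ms <= UINT32_MAX / TICKS_PER_MS);
    return ms * TICKS_PER_MS;
}

/* Hypothetical helper: nonzero once `now` is at or after `deadline`,
   even if the 32-bit counter wrapped between the two readings.
   Correct while the two times are less than 2^31 ticks apart; the
   conversion to int32_t relies on the usual two's-complement behaviour. */
int time_reached(uint32_t now, uint32_t deadline)
{
    return (int32_t)(now - deadline) >= 0;
}
```

On a target, a call such as hwtimer_delay(timer, ms_to_ticks(1000)) would then express the same delay as the listing above. The signed difference is used instead of a plain now >= deadline because it stays correct when the counter wraps between the two readings.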

Channel communications

The standard way for tasks to communicate is to use the XCORE’s ‘chanend’ resources to send data through the communication fabric. This has the advantage of allowing synchronised transactions even between tasks running on different tiles or packages.

Channels

Lib_xcore provides a channel type in xcore/channel.h which is used to establish a channel between two tasks on the current tile. The following code listing is a program where the sends_first task sends one word of data to receives_first, which responds with a single byte. In this program, chan_alloc is called to establish a channel which the two tasks can use to communicate. Notice that the returned channel_t is simply a structure containing the two ends of the channel. The channel is created before the tasks are started, and each task is passed a different end of the channel.

```c
#include <stdio.h>
#include <xcore/channel.h>
#include <xcore/parallel.h>

DECLARE_JOB(sends_first, (chanend_t));
void sends_first(chanend_t c)
{
    chan_out_word(c, 0x12345678);
    printf("Received byte: '%c'\n", chan_in_byte(c));
}

DECLARE_JOB(receives_first, (chanend_t));
void receives_first(chanend_t c)
{
    printf("Received word: 0x%lx\n", chan_in_word(c));
    chan_out_byte(c, 'b');
}

int main(void)
{
    channel_t chan = chan_alloc();

    PAR_JOBS(
        PJOB(sends_first, (chan.end_a)),
        PJOB(receives_first, (chan.end_b)));

    chan_free(chan);
}
```

This approach has the advantage that the two tasks are decoupled - as long as they implement the same application-level protocol, neither one needs knowledge of what it is communicating with or where the other end of the channel is executing within the network.

Within the tasks, chan_ functions are used to send and receive data. These functions synchronise the tasks: chan_out_word blocks until chan_in_word is called in the other task, and vice versa. It is imperative that the correct corresponding ‘receive’ function is called for each ‘send’ function invocation. Otherwise the tasks will usually deadlock or raise a hardware exception.

Streaming channels

Regular channels implement handshaking for each send/receive pair. This synchronises the participating threads, and it also prevents tasks from progressing if more or less data is sent than the receiving end expects. Whilst handshaking is desirable in most cases, it incurs a runtime penalty. Time is spent performing the handshake itself, and the sending task is often held up waiting for synchronisation.

Streaming channels are provided to address this. For each chan_ function in xcore/channel.h, there is a corresponding s_chan_ function in xcore/channel_streaming.h. These have the same functionality as their non-streaming counterparts, except that no handshaking is performed. Each chanend has a buffer which can hold at least one word of data, and sending data over a streaming channel only blocks when there is insufficient space in the buffer for the outgoing data.

Routing capacity

The communication fabric within and between XCore packages has a limited capacity, so only a limited number of routes between channel ends can be in use at any given time. If no capacity is available in the network when a task attempts to send data, that task is queued until capacity becomes available. Regular channels establish and ‘tear down’ a route through the network on each transaction, as a side-effect of the handshake. This means that routes remain open for very limited times, and all tasks have an opportunity to use the network. However, as streaming channels do not perform handshaking, their routes remain open for the entire period between their allocation and deallocation. If too many streaming channels are left open, they can starve other tasks of access to the network, including tasks using non-streaming channels. For this reason, it is advisable to keep streaming channels allocated for as short a time as possible.

Basic port I/O

Ports are a resource which allow interaction with the external pins attached to an XCore package. Each tile has a variety of ports of different widths; these will often have pins in common, and the actual mapping of ports to pins varies by package. Ports are extremely flexible and can be used to implement I/O peripherals as software tasks.

Unlike pooled resources, ports are not allocated by the XCore, since a port mapped to the desired pins will always be required. Instead, port handles are available from platform.h and have the form XS1_PORT_<W><I>. Here <W> is the width of the port in bits, and <I> is a single letter distinguishing the port from others of the same width. For example, XS1_PORT_16A is the first 16-bit port. As ports are not managed by a pool, they must be enabled explicitly before use and disabled when no longer required.

Like timers, ports have a configurable trigger which, when set, causes reading the port to block until the trigger condition is met. For example, a port can be configured so that input blocks until its pins read a particular value. By default this trigger persists. Input will therefore be non-blocking whilst the condition is met, but will become blocking again once the trigger condition becomes false. Functions for interacting with ports, including input, output and configuring triggers, are available from xcore/port.h.

The following program lights an LED while a button is held. Each time the port is read, its trigger condition is updated to occur when its value is not equal to its current one - this means the port is only read once each time the value on its pins changes.

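```c
#include <platform.h>
#include <xcore/port.h>

int main(void)
{
    port_t button_port = XS1_PORT_4D,
           led_port = XS1_PORT_4C;

    port_enable(button_port);
    port_enable(led_port);

    while (1)
    {
        /* Returns at once the first time (no trigger yet); afterwards
           blocks until the pins differ from the last value read */
        unsigned long button_state = port_in(button_port);
        /* Re-arm the trigger with the value just read */
        port_set_trigger_in_not_equal(button_port, button_state);
        /* LED on while bit 0 of the button port reads 0 */
        port_out(led_port, ~button_state & 0x1);
    }

    /* Not reached: the loop above never exits */
    port_disable(led_port);
    port_disable(button_port);
}
```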
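The effect of re-arming the trigger with the value just read can be checked on a host. The sketch below is a model rather than lib_xcore code. simulate_button_loop is a hypothetical name, and each array element stands for the pin value at one instant. The button is assumed to read 0 on bit 0 while pressed, as the ~button_state & 0x1 expression in the listing implies.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical host-side model of the read/re-arm loop in the listing
 * above. samples[i] is the value on the button port's pins at instant i.
 * Returns how many port_in calls would complete; leds[k] receives the
 * value written to the LED port after the k-th completed read, so leds
 * must have room for up to n entries.
 */
size_t simulate_button_loop(const unsigned long *samples, size_t n,
                            unsigned long *leds)
{
    size_t reads = 0;
    int armed = 0;              /* no trigger is set before the first read */
    unsigned long trigger = 0;  /* value the pins must differ from */

    assert(n == 0 || (samples != NULL && leds != NULL));
    for (size_t i = 0; i < n; i++) {
        if (armed && samples[i] == trigger)
            continue;                       /* port_in would still be blocked */

        unsigned long state = samples[i];   /* port_in completes */
        trigger = state;                    /* port_set_trigger_in_not_equal */
        armed = 1;
        leds[reads++] = ~state & 0x1;       /* LED on while bit 0 reads 0 */
    }
    return reads;
}
```

Consider the samples {0xF, 0xF, 0xE, 0xE, 0xF}, where the button is pressed at the third instant and released at the fifth. Only three reads complete, writing 0, 1 and 0 to the LED; repeated identical samples never wake the loop.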

Event handling

Events are an important concept in the XCore architecture, as they allow resources to indicate that they are ready for an input operation. Lib_xcore provides a select construct for waiting for an event and running handling code when it occurs.

```c
#include <platform.h>
#include <xcore/port.h>
#include <xcore/select.h>

int main(void)
{
    port_t button_port = XS1_PORT_4D,
           led_port = XS1_PORT_4C;

    port_enable(button_port);
    port_enable(led_port);

    SELECT_RES(
        CASE_THEN(button_port, on_button_change))
    {
        on_button_change: {
            unsigned long button_state = port_in(button_port);
            port_set_trigger_in_not_equal(button_port, button_state);
            port_out(led_port, ~button_state & 0x1);
            continue;
        }
    }

    port_disable(led_port);
    port_disable(button_port);
}
```

The above application shows a lib_xcore SELECT_RES, from xcore/select.h, which handles a single event. It is equivalent to the example in Basic port I/O, except that the port_in will not block. This is because port_in is not executed until the port’s trigger condition is met; instead, the thread blocks at the start of the SELECT_RES.

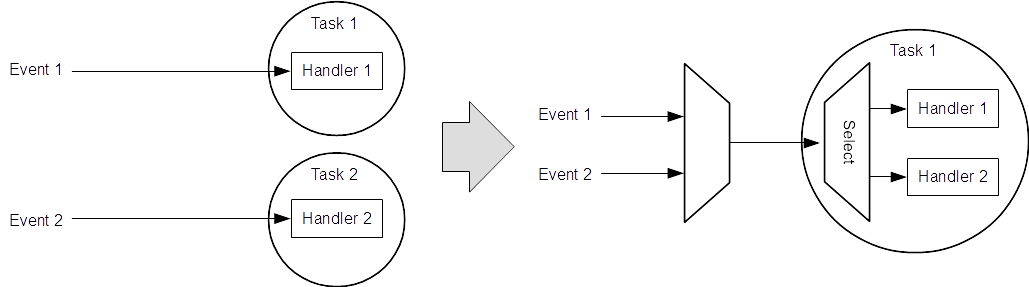

In this example, and in previous examples in this document, the task blocks waiting for a single resource. Conceptually this is often a good design, and it is the approach the programming model generally encourages. However, it is not usually an effective use of the available cores, as most tasks will spend most of their time paused in a wait state. A task may also need to accept input from multiple resources, for example a ‘collector’ task which listens to multiple channels.

For these reasons, the XCore allows a task to configure multiple resources to generate events, and then wait for any one of those events to occur. The lib_xcore select construct supports this, making it analogous to the Unix select() call. Conceptually, the XCore multiplexes the events which are of interest to a task, and the select demultiplexes them.

The following listing introduces a timer which allows the LED to flash whilst the button is held. If an event occurs whilst another is being handled, the later event remains pending on its resource. It is handled once the running handler executes continue and the select returns to its wait state.

```c
#include <platform.h>
#include <xcore/hwtimer.h>
#include <xcore/port.h>
#include <xcore/select.h>

int main(void)
{
    port_t button_port = XS1_PORT_4D,
           led_port = XS1_PORT_4C;

    hwtimer_t timer = hwtimer_alloc();
    port_enable(button_port);
    port_enable(led_port);

    int led_state = 0;
    unsigned long button_state = port_in(button_port);
    port_set_trigger_in_not_equal(button_port, button_state);

    SELECT_RES(
        CASE_THEN(button_port, on_button_change),
        CASE_THEN(timer, on_timer))
    {
        on_button_change:
            button_state = port_in(button_port);
            port_set_trigger_value(button_port, button_state);
            continue;

        on_timer:
            hwtimer_set_trigger_time(timer, hwtimer_get_time(timer) + 20000000);
            if (~button_state & 0x1) {
                led_state = !led_state;
                port_out(led_port, led_state);
            }
            continue;
    }

    port_disable(led_port);
    port_disable(button_port);
    hwtimer_free(timer);
}
```

The SELECT_RES macro takes one or more arguments, which must be expansions of ‘case specifiers’ from the same header. The most common case specifier is CASE_THEN. This takes two parameters: the first is a resource on which to wait for an event, and the second is a label within the SELECT block to jump to when the event occurs. On entry to the SELECT_RES the task enters a wait state. When an event becomes available on one of the specified resources, control is transferred to the label specified as its handler. Each handler must end with either break or continue. A break causes control to jump to the point after the SELECT block, whereas continue returns to the wait state to handle another event.

In the preceding example the timer’s trigger is moved into the future each time it is reached, causing it to trigger indefinitely at regular intervals. In the handler for the timer event, the LED state is toggled only if the button is currently held. This structure means that the task spends most of its time waiting for either of the two possible events. However, the timer event still occurs even when the button isn’t held, so the handler runs even though it has no effect. The select construct can conditionally mask events which aren’t always of interest; this is called a ‘guard expression’. In the following listing, the guard expression prevents the on_timer event from being handled until its condition is met. The guard expression is re-evaluated each time the SELECT enters its wait state. If an event occurs whilst ‘masked’ by a guard expression, it is handled once the condition is re-evaluated and found to be true.

```c
#include <platform.h>
#include <xcore/hwtimer.h>
#include <xcore/port.h>
#include <xcore/select.h>

int main(void)
{
    port_t button_port = XS1_PORT_4D,
           led_port = XS1_PORT_4C;

    hwtimer_t timer = hwtimer_alloc();
    port_enable(button_port);
    port_enable(led_port);

    int led_state = 0;
    unsigned long button_state = port_in(button_port);
    port_set_trigger_in_not_equal(button_port, button_state);

    SELECT_RES(
        CASE_THEN(button_port, on_button_change),
        CASE_GUARD_THEN(timer, ~button_state & 0x1, on_timer))
    {
        on_button_change:
            button_state = port_in(button_port);
            port_set_trigger_value(button_port, button_state);
            continue;

        on_timer:
            hwtimer_set_trigger_time(timer, hwtimer_get_time(timer) + 20000000);
            led_state = !led_state;
            port_out(led_port, led_state);
            continue;
    }

    port_disable(led_port);
    port_disable(button_port);
    hwtimer_free(timer);
}
```
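The guard rule described above can be modelled in a few lines of host C: the guard is evaluated on every entry to the wait state, and masked events stay pending. This is a simplified sketch, not how SELECT_RES is implemented. select_next and the SEL_* names are hypothetical, and checking the cases in listing order is an arbitrary choice made here.

```c
#include <assert.h>

/* Which handler the guarded two-case select would run next. */
enum handler { SEL_WAIT, SEL_ON_BUTTON_CHANGE, SEL_ON_TIMER };

/*
 * Hypothetical model of one entry to the wait state:
 * - the guard is evaluated afresh on every call;
 * - a masked timer event is not handled, but the caller keeps it pending;
 * - cases are checked in listing order (an arbitrary choice for this
 *   sketch, not a statement about hardware priority).
 */
enum handler select_next(int button_pending, int timer_pending,
                         unsigned long button_state)
{
    int timer_guard = (~button_state & 0x1) != 0;  /* guard from the listing */

    if (button_pending)
        return SEL_ON_BUTTON_CHANGE;
    if (timer_pending && timer_guard)
        return SEL_ON_TIMER;
    return SEL_WAIT;
}
```

Suppose the button is released (bit 0 high). A pending timer event is then left alone and the select keeps waiting. Once on_button_change records a pressed state, the next entry to the wait state handles that same pending event.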

Advanced port I/O

Ports are extremely flexible, and ‘soft-peripheral’ implementations can often delegate significant portions of their work to them; this can drastically improve responsiveness. In particular, ports can be connected to configurable Clock Block resources which can be used to drive the timing of port operations. Documenting the full capabilities and interfaces of ports and clock blocks is not within the scope of this document. Further information about these resources can be found in the lib_xcore port and clock APIs, and in the XCore instruction set documentation.

Guiding stack size calculation

As shown earlier in this document, the XMOS tools are able to calculate stack size requirements for many C/C++ functions.

Generally a function’s stack requirement is calculable when its own frame is a fixed size and it only calls functions whose stack sizes are also calculable.

Function pointer groups

One obvious case where it is not possible to determine a function’s stack requirement is when it makes a call through a function pointer. It is usually impossible to determine automatically all the functions to which a given pointer may point. For this reason, the XMOS tools allow indirect call sites to be annotated with a function pointer group. Functions can also be annotated with one or more groups to which they should belong. When the stack size calculator encounters an indirect call through an annotated function pointer, it takes the stack size requirement to be that of the hungriest callee in the pointer’s group.

The following snippet declares a function pointer fp which is a member of the group named my_functions:

```c
__attribute__(( fptrgroup("my_functions") )) void (*fp)(void);
```

When a call through such a pointer appears in a function, that function’s stack size will include a sufficient allocation for a call to any function which has been placed in my_functions. Pointer groups are empty until functions are added to them explicitly. Such a call is therefore dangerous until all possible callees have been annotated with the correct group. In the following snippet, func1 is annotated so that it is a member of the group my_functions:

```c
__attribute__(( fptrgroup("my_functions") ))
void func1(void)
{
}
```
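A fuller sketch of the pattern follows. Two hypothetical functions, double_it and negate, are placed in my_functions, and every call through the annotated pointer op_fp goes to one of them. Only the fptrgroup attribute comes from this document; the function and pointer names are illustrative. GCC and Clang ignore the unknown attribute with a warning, so the same code also builds and runs on a host, just without the stack analysis.

```c
#include <assert.h>

/* Both possible callees join the group "my_functions". */
__attribute__(( fptrgroup("my_functions") ))
int double_it(int x)
{
    return 2 * x;
}

__attribute__(( fptrgroup("my_functions") ))
int negate(int x)
{
    return -x;
}

/* The pointer carries the same group, so a call through it is sized for
   the hungriest member of my_functions. */
__attribute__(( fptrgroup("my_functions") )) int (*op_fp)(int);

/* Chooses a callee at runtime and calls it indirectly. */
int apply(int use_double, int x)
{
    op_fp = use_double ? double_it : negate;
    return op_fp(x);
}
```

Any function that may be assigned to op_fp needs the attribute. If a function were assigned without it, the calculated stack size for apply would not account for that function.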

Explicitly setting stack size

In some cases it is necessary to explicitly set the stack size allocated for a function. This may be because a function does not have a fixed requirement (e.g. where a variable length array is used) or might be desirable when heavy use of indirect calls makes annotation infeasible.

In these cases it is possible to specify the number of words which should be allocated for calls to a particular function. The following snippet uses assembler directives to set the stack requirement for a function named task_main to 1024 words:

```
.globl task_main.nstackwords
.set task_main.nstackwords,1024
```

Note that this is simply defining a symbol (whose name is based on the function name) which would be defined by the compiler for a calculable function.

This code can be assembled and linked as a separate object, or can be passed to xcc as an additional input file when compiling C/C++ code.

A limited set of binary operators is available for expressing stack size requirements; often this is useful for setting a function’s requirements in terms of functions it calls. The available operators are:

- + and * (addition and multiplication)
- $M evaluates to the greater of its two operands (useful for finding the hungriest of a list of functions)
- $A evaluates to its left operand rounded up to the next multiple of the right operand (useful for rounding up to the stack alignment requirement)

Parentheses, ( and ), are also available.

The following directives set task_main’s stack requirement to 1024 words plus the greater of the requirements of my_function0 and my_function1. The total is rounded up to a multiple of two words (double-word alignment):

```
.globl task_main.nstackwords
.set task_main.nstackwords, (1024 + (my_function0.nstackwords $M my_function1.nstackwords)) $A 2
```
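The expression above can be checked by mirroring the two operators in C. In this host-side sketch, op_M and op_A are hypothetical stand-ins for $M and $A and are not part of any XMOS library. The callee sizes passed to task_main_nstackwords are made-up values in words.

```c
#include <assert.h>

/* Hypothetical stand-in for $M: the greater of the two operands. */
unsigned op_M(unsigned a, unsigned b)
{
    return a > b ? a : b;
}

/* Hypothetical stand-in for $A: a rounded up to the next multiple of align. */
unsigned op_A(unsigned a, unsigned align)
{
    assert(align != 0);
    return (a + align - 1) / align * align;
}

/* Mirrors:
   (1024 + (my_function0.nstackwords $M my_function1.nstackwords)) $A 2 */
unsigned task_main_nstackwords(unsigned f0_words, unsigned f1_words)
{
    return op_A(1024 + op_M(f0_words, f1_words), 2);
}
```

Take hypothetical callee requirements of 200 and 301 words. The expression gives 1024 + 301 = 1325, which $A 2 rounds up to 1326 words.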

When stack sizes are set this way, the compiler will still emit warnings for unannotated calls through pointers; these can be suppressed with the -Wno-xcore-fptrgroup option.

Care should be taken when manually setting stack requirements. A function’s allocation must be sufficient for its ‘local’ usage, for all functions it calls, and for all threads it launches using PAR_JOBS.